Extract from Amazon Web Services Evangelist Jeff Barr's CloudSearch blog post; see the post for more information about how you can start searching in an hour for less than $100 a month.

Continuing along in our quest to give you the tools that you need to build ridiculously powerful web sites and applications in no time flat at the lowest possible cost, I'd like to introduce you to Amazon CloudSearch. If you have ever searched Amazon.com, you've already used the technology that underlies CloudSearch. You can now have a very powerful and scalable search system (indexing and retrieval) up and running in less than an hour.

You, sitting in your corporate cubicle, your coffee shop, or your dorm room, now have access to search technology at a very affordable price. You can start to take advantage of many years of Amazon R&D in the search space for just $0.12 per hour (I'll talk about pricing in depth later).

What is Search?

Search plays a major role in many web sites and other types of online applications. The basic model is seemingly simple. Think of your set of documents or your data collection as a book or a catalog, composed of a number of pages. You know that you can find the desired content quickly and efficiently by simply consulting the index.

Search does the same thing by indexing each document in a way that facilitates rapid retrieval. You enter some terms into a search box and the site responds (rather quickly if you use CloudSearch) with a list of pages that match the search terms.
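The book-index analogy can be sketched in a few lines of Python. This is only an illustration of the general idea of an inverted index, not a description of how CloudSearch is implemented; the `build_index` and `search` helpers and the sample pages are hypothetical.

```python
from collections import defaultdict

def build_index(pages):
    """Map each lowercase term to the set of page ids that contain it."""
    index = defaultdict(set)
    for page_id, text in pages.items():
        for term in text.lower().split():
            index[term].add(page_id)
    return index

def search(index, query):
    """Return the ids of pages that contain every term in the query."""
    terms = query.lower().split()
    if not terms:
        return set()
    # Start from the pages matching the first term, then intersect.
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result

# Hypothetical sample collection, for illustration only.
pages = {
    1: "dog training basics",
    2: "golden retriever care",
    3: "training a golden retriever dog",
}
index = build_index(pages)
print(sorted(search(index, "dog training")))
```

A lookup touches only the entries for the query terms instead of scanning every page, which is why consulting an index is faster than iterating through the whole collection.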

As is the case with many things, this simple model masks a lot of complexity and might raise a lot of questions in your mind. For example:

- How efficient is the search? Did the search engine simply iterate through every page, looking for matches, or is there some sort of index?

- The search results were returned in the form of an ordered list. What factor(s) determined which documents were returned, and in what order (commonly known as ranking)? How are the results grouped?

- How forgiving or expansive was the search? Did a search for "dogs" return results for "dog"? Did it return results for "golden retriever" or "pet"?

- What kinds of complex searches or queries can be used? Does a search for "dog training" return the expected results? Can you search for "dog" in the Title field and "training" in the Description?

- How scalable is the search? What if there are millions or billions of pages? What if there are thousands of searches per hour? Is there enough storage space?

- What happens when new pages are added to the collection, or old pages are removed? How does this affect the search results?

- How can you efficiently navigate through and explore search results? Can you group and filter the search results in ways that take advantage of multiple named fields (often known as a faceted search)?

Needless to say, things can get very complex very quickly. Even if you can write code to do some or all of this yourself, you still need to worry about the operational aspects. We know that scaling a search system is non-trivial. There are lots of moving parts, all of which must be designed, implemented, instantiated, scaled, monitored, and maintained. As you scale, algorithmic complexity often comes into play; you soon learn that algorithms and techniques which were practical at the beginning aren't always practical at scale.

What is Amazon CloudSearch?

Amazon CloudSearch is a fully managed search service in the cloud. You can set it up and start processing queries in less than an hour, with automatic scaling for data and search traffic, all for less than $100 per month.

CloudSearch hides all of the complexity and all of the search infrastructure from you. You simply provide it with a set of documents and decide how you would like to incorporate search into your application.

You don't have to write your own indexing, query parsing, query processing, results handling, or any of that other stuff. You don't need to worry about running out of disk space or processing power, and you don't need to keep rewriting your code to add more features.

With CloudSearch, you can focus on your application layer. You upload your documents, CloudSearch indexes them, and you can build a search experience that is custom-tailored to the needs of your customers.

How Does it Work?

The Amazon CloudSearch model is really simple, but don't confuse simple with simplistic -- there's a lot going on behind the scenes!

Here's all you need to do to get started (you can perform these operations from the AWS Management Console, the CloudSearch command line tools, or through the CloudSearch APIs):

- Create and configure a Search Domain. This is a data container and a related set of services. It exists within a particular Availability Zone of a single AWS Region (initially US East).

- Upload your documents. Documents can be uploaded as JSON or XML that conforms to our Search Document Format (SDF). Uploaded documents will typically be searchable within seconds. You can, if you'd like, send data over an HTTPS connection to protect it while it is in transit.

- Perform searches.

There are plenty of options and goodies, but that's all it takes to get started.
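To make the upload step a little more concrete, here is a rough sketch of building a JSON document batch in Python. The post does not spell out the SDF layout, so the field names used here (`type`, `id`, `version`, `lang`, `fields`) are an assumption based on the launch-era format and should be checked against the CloudSearch documentation. The `make_add` and `make_delete` helpers and the sample documents are hypothetical, and the sketch only builds the batch; it does not send it anywhere.

```python
import json

def make_add(doc_id, version, fields, lang="en"):
    """Build an 'add' operation (field names are assumed, see the note above)."""
    return {
        "type": "add",
        "id": doc_id,
        "version": version,
        "lang": lang,
        "fields": fields,
    }

def make_delete(doc_id, version):
    """Build a 'delete' operation for a previously uploaded document."""
    return {"type": "delete", "id": doc_id, "version": version}

# Hypothetical sample batch: one document added, one removed.
batch = [
    make_add("movie_1", 1, {"title": "Dog Training", "genre": "documentary"}),
    make_delete("movie_0", 2),
]

sdf_json = json.dumps(batch, indent=2)
print(sdf_json)
```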

Amazon CloudSearch applies data updates continuously, so newly changed data becomes searchable in near real-time. Your index is stored in RAM to keep throughput high and to speed up document updates. You can also tell CloudSearch to re-index your documents; you'll need to do this after changing certain configuration options, such as

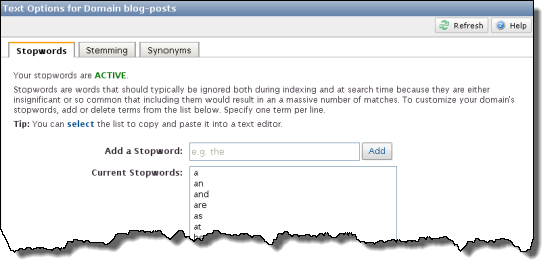

stemming (converting variations of a word to a base word, such as "dogs" to "dog") or

stop words (very common words that you don't want to index).
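As a toy illustration of what stemming and stop words do to text before it is indexed, consider the sketch below. The suffix-stripping rule and the stop word list are deliberately simplistic and entirely hypothetical; CloudSearch's own text processing is configurable and certainly more sophisticated.

```python
# Hypothetical stop word list, for illustration only.
STOP_WORDS = {"a", "an", "the", "of", "and"}

def naive_stem(word):
    """Strip a trailing 's' from longer words; a toy rule, not a real stemmer."""
    if len(word) > 3 and word.endswith("s") and not word.endswith("ss"):
        return word[:-1]
    return word

def normalize(text):
    """Lowercase the text, drop stop words, and stem the remaining tokens."""
    tokens = text.lower().split()
    return [naive_stem(token) for token in tokens if token not in STOP_WORDS]

print(normalize("The dogs and the cats"))  # ['dog', 'cat']
```

Because the terms stored in the index depend on these rules, changing them means the existing documents have to be processed again, which is consistent with the re-indexing requirement described above.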

Amazon CloudSearch has a number of advanced search capabilities including faceting and fielded search:

Faceting allows you to categorize your results into sub-groups, which can be used as the basis for another search. You could search for "umbrellas" and use a facet to group the results by price, such as $1-$10, $10-$20, $20-$50, and so forth. CloudSearch will even return document counts for each sub-group.

Fielded searching allows you to search on a particular attribute of a document. You could locate movies in a particular genre or with a particular actor, or products within a certain price range.
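The two ideas can be sketched in plain Python over an in-memory list. This is a conceptual illustration only, not the CloudSearch query API; the product data, the helper names, and the choice to treat each price range as half-open (so that a $10 item falls in exactly one bucket) are all assumptions.

```python
from collections import Counter

# Price ranges from the umbrella example, treated as half-open [low, high).
PRICE_BUCKETS = [(1, 10), (10, 20), (20, 50)]

def bucket_label(price):
    """Return the label of the price bucket that contains this price."""
    for low, high in PRICE_BUCKETS:
        if low <= price < high:
            return f"${low}-${high}"
    return "other"

def facet_counts(docs, key_fn):
    """Count how many documents fall into each facet value."""
    return Counter(key_fn(doc) for doc in docs)

def fielded_search(docs, field, value):
    """Return the documents whose named field equals the given value."""
    return [doc for doc in docs if doc.get(field) == value]

# Hypothetical results of a search for "umbrellas".
products = [
    {"name": "compact umbrella", "price": 8, "color": "black"},
    {"name": "golf umbrella", "price": 25, "color": "blue"},
    {"name": "kids umbrella", "price": 12, "color": "blue"},
    {"name": "travel umbrella", "price": 15, "color": "black"},
]

counts = facet_counts(products, lambda doc: bucket_label(doc["price"]))
print(dict(counts))
blue = fielded_search(products, "color", "blue")
print([doc["name"] for doc in blue])
```

Each facet count could then be shown next to a link that narrows the search to that sub-group.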

Search Scaling

Behind the scenes, CloudSearch stores data and processes searches using search instances. Each instance has a finite amount of CPU power and RAM. As your data expands, CloudSearch will automatically launch additional search instances and/or scale to larger instance types. As your search traffic expands beyond the capacity of a single instance, CloudSearch will automatically launch additional instances and replicate the data to the new instance. If you have a lot of data and a high request rate, CloudSearch will automatically scale in both dimensions for you.

Amazon CloudSearch will automatically scale your search fleet up to a maximum of 50 search instances. We'll be increasing this limit over time; if you have an immediate need for more than 50 instances, please feel free to contact us and we'll be happy to help.

The net-net of all of this automation is that you don't need to worry about having enough storage capacity or processing power. CloudSearch will take care of it for you, and you'll pay only for what you use.

Pricing Model

The Amazon CloudSearch pricing model is straightforward:

You'll be billed based on the number of running search instances. There are three search instance sizes (Small, Large, and Extra Large) at prices ranging from $0.12 to $0.68 per hour (these are US East Region prices, since that's where we are launching CloudSearch).

There's a modest charge for each batch of uploaded data. If you change configuration options and need to re-index your data, you will be billed $0.98 for each Gigabyte of data in the search domain.

There's no charge for in-bound data transfer, data transfer out is billed at the usual AWS rates, and you can transfer data to and from your Amazon EC2 instances in the Region at no charge.
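A quick back-of-the-envelope calculation shows how the hourly price relates to the "less than $100 per month" figure. The sketch assumes a single Small search instance running continuously for a 30-day month, and it leaves out batch upload and data transfer charges, whose amounts are not given here.

```python
HOURS_PER_MONTH = 24 * 30      # assumes a 30-day month
SMALL_INSTANCE_RATE = 0.12     # dollars per hour, US East, from the text
REINDEX_RATE_PER_GB = 0.98     # dollars per gigabyte re-indexed, from the text

def monthly_instance_cost(instances, hourly_rate, hours=HOURS_PER_MONTH):
    """Cost of running the given number of search instances for a month."""
    return instances * hourly_rate * hours

def reindex_cost(gigabytes):
    """Cost of re-indexing a search domain holding this many gigabytes."""
    return gigabytes * REINDEX_RATE_PER_GB

print(f"${monthly_instance_cost(1, SMALL_INSTANCE_RATE):.2f}")  # $86.40
print(f"${reindex_cost(5):.2f}")                                # $4.90
```

One Small instance therefore comes to roughly $86 for the month, which leaves some headroom under $100 for uploads and the occasional re-index.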

Advanced Searching

Like the other Amazon Web Services, CloudSearch allows you to get started with a modest effort and to add richness and complexity over time. You can easily implement advanced features such as faceted search, free text search, Boolean search expressions, customized relevance ranking, field-based sorting and searching, and text processing options such as stopwords, synonyms, and stemming.

CloudSearch Programming

You can interact with CloudSearch through the AWS Management Console, a complete set of Amazon CloudSearch APIs, and a set of command line tools. You can easily create, configure, and populate a search domain through the AWS Management Console.

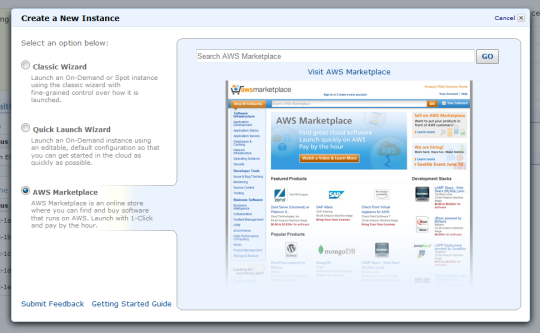

Here's a tour, starting with the welcome screen:

You start by creating a new Search Domain:

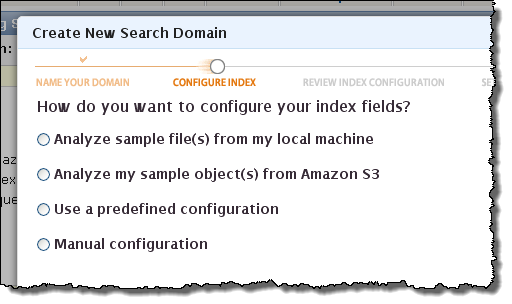

You can then load some sample data. It can come from local files, an Amazon S3 bucket, or several other sources:

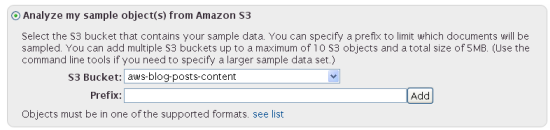

Here's how you choose an S3 bucket (and an optional prefix to limit which documents will be indexed):

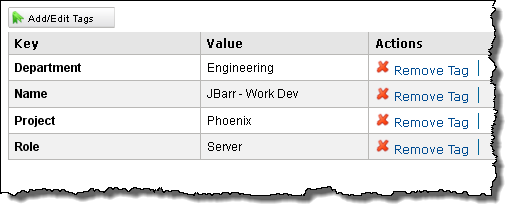

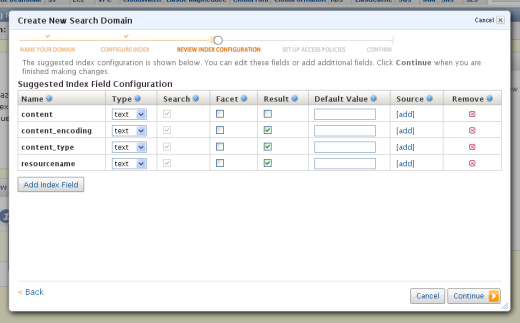

You can also configure your initial set of index fields:

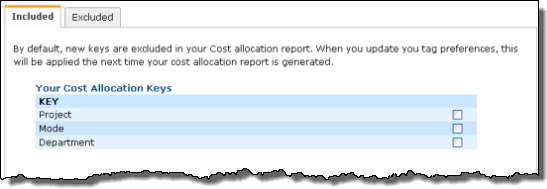

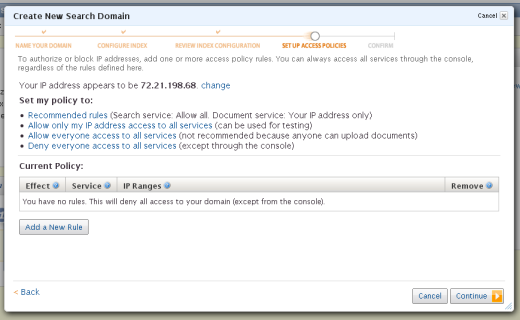

You can also create access policies for the CloudSearch APIs:

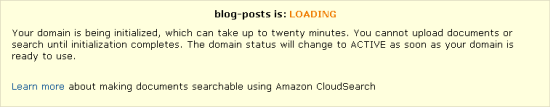

Your search domain will be initialized and ready to use within twenty minutes:

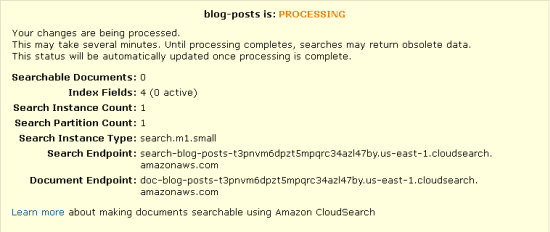

Processing your documents is the final step in the initialization process:

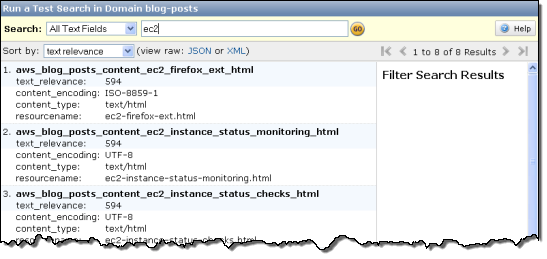

After your documents have been processed you can perform some test searches from the console:

The CloudSearch console also provides you with full control over a number of indexing options including stopwords, stemming, and synonyms:

CloudSearch in Action

Some of our early customers have already deployed some applications powered by CloudSearch. Here's a sampling:

- Search Technologies has used CloudSearch to index Wikipedia (see the demo).

- NewsRight is using CloudSearch to deliver search for news content, usage and rights information to over 1,000 publications.

- ex.fm is using CloudSearch to power their social music discovery website.

- CarDomain is powering search on their social networking website for car enthusiasts.

- Sage Bionetworks is powering search on their data-driven collaborative biological research website.

- Smugmug is using CloudSearch to deliver search on their website for over a billion photos.
