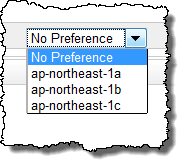

Amazon Web Services (AWS) is expanding in Japan with the addition of a third Availability Zone.

The move means AWS will most likely add more data centers to keep up with the steady demand it has seen since it launched its Tokyo region 18 months ago.

For readers unfamiliar with AWS Regions and Availability Zones:

Amazon Web Services serves hundreds of thousands of customers in more than 190 countries.

AWS currently spans 8 regions around the globe.

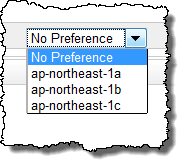

Each region has multiple Availability Zones.

Each Availability Zone can encompass multiple data centers.

See a detailed list of offerings at all AWS locations.

Below is an excerpt from a blog post by Jeff:

We announced an AWS Region in Tokyo about 18 months ago. In the time since the launch, our customers have launched all sorts of interesting applications and businesses there. Here are a few examples:

- Cookpad.com is the top recipe site in Japan. They are hosted entirely on AWS, and handle more than 15 million users per month.

- KAO is one of Japan's largest manufacturers of cosmetics and toiletries. They recently migrated their corporate site to the AWS cloud.

- Fukuoka City launched the Kawaii Ward project to promote tourism to the virtual city. After a member of the popular Japanese idol group AKB48 raised awareness of this site, virtual residents flocked to the site to sign up for an email newsletter. They expected 10,000 registrations in the first week and were pleasantly surprised to receive over 20,000.

Demand for AWS resources in Japan has been strong and steady, and we've been expanding the region accordingly. You might find it interesting to know that an AWS region can be expanded in two different ways. First, we can add additional capacity to an existing Availability Zone, spanning multiple datacenters if necessary. Second, we can create an entirely new Availability Zone.

Over time, as we combine both of these approaches, a single AWS region can grow to encompass many datacenters. For example, the US East (Northern Virginia) region currently occupies more than ten datacenters structured as multiple Availability Zones.
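To make the two growth paths concrete, here is a minimal toy model of the hierarchy described above: a region holds Availability Zones, and each zone holds one or more datacenters. The class names, methods, and the zone and datacenter labels are all hypothetical illustrations, not part of any AWS API.

```python
from dataclasses import dataclass, field


@dataclass
class AvailabilityZone:
    """Hypothetical model: an Availability Zone made of one or more datacenters."""
    name: str
    datacenters: list[str] = field(default_factory=list)


@dataclass
class Region:
    """Hypothetical model: a region made of multiple Availability Zones."""
    name: str
    zones: dict[str, AvailabilityZone] = field(default_factory=dict)

    def add_capacity(self, zone_name: str, datacenter: str) -> None:
        """Growth path 1: add a datacenter to an existing zone (KeyError if absent)."""
        self.zones[zone_name].datacenters.append(datacenter)

    def add_zone(self, zone_name: str, datacenter: str) -> None:
        """Growth path 2: create an entirely new Availability Zone."""
        if zone_name in self.zones:
            raise ValueError(f"zone {zone_name!r} already exists")
        self.zones[zone_name] = AvailabilityZone(zone_name, [datacenter])

    def datacenter_count(self) -> int:
        """Total datacenters across all zones in the region."""
        return sum(len(zone.datacenters) for zone in self.zones.values())


# Illustrative usage with made-up names: start with two zones, then grow.
tokyo = Region("tokyo", {
    "zone-a": AvailabilityZone("zone-a", ["dc-1"]),
    "zone-b": AvailabilityZone("zone-b", ["dc-2"]),
})
tokyo.add_capacity("zone-a", "dc-3")  # more capacity in an existing zone
tokyo.add_zone("zone-c", "dc-4")      # a third Availability Zone
print(len(tokyo.zones), tokyo.datacenter_count())  # 3 4
```

Combining both calls over time is how the model ends up with many datacenters spread across several zones, mirroring the US East example.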

Today, we are expanding the Tokyo region with the addition of a third Availability Zone.

This will add capacity and will also provide you with additional flexibility. As is always the case with AWS, untargeted launches of EC2 instances will now make use of this zone with no changes to existing applications or configurations. If you are currently targeting specific Availability Zones, please make sure that your code can handle this new option.
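One way to make targeted launches tolerate a new zone is to avoid hardcoding the zone list. The sketch below assumes your own launcher decides placement and receives the zone names at runtime (for example, from the EC2 `DescribeAvailabilityZones` API); the function name and the zone and instance labels are hypothetical. It spreads instances round-robin across however many zones it is given.

```python
from itertools import cycle


def spread_across_zones(instance_ids: list[str], zones: list[str]) -> dict[str, list[str]]:
    """Assign instances round-robin across whatever zones are supplied.

    `zones` is assumed to be discovered at runtime rather than hardcoded,
    so a newly added zone is used without code changes.
    """
    if not zones:
        raise ValueError("at least one Availability Zone is required")
    placement: dict[str, list[str]] = {zone: [] for zone in zones}
    # cycle() repeats the zone list; zip() stops when the instances run out.
    for instance_id, zone in zip(instance_ids, cycle(zones)):
        placement[zone].append(instance_id)
    return placement


# Illustrative, made-up zone and instance names.
two_zones = ["zone-1", "zone-2"]
three_zones = two_zones + ["zone-3"]
instances = [f"i-{n}" for n in range(6)]

before = spread_across_zones(instances, two_zones)
after = spread_across_zones(instances, three_zones)
print(before)  # {'zone-1': ['i-0', 'i-2', 'i-4'], 'zone-2': ['i-1', 'i-3', 'i-5']}
print(after)   # {'zone-1': ['i-0', 'i-3'], 'zone-2': ['i-1', 'i-4'], 'zone-3': ['i-2', 'i-5']}
```

Because the function only iterates over the list it receives, adding a third zone changes the placement without touching the code.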