On 13 August, AWS announced new locations and console support for AWS Direct Connect. Great article by Jeff...

Did you know that you can use AWS Direct Connect to set up a dedicated 1 Gbps or 10 Gbps network connection from your existing data center or corporate office to AWS?

New Locations

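Today we are adding two new Direct Connect locations, giving you more ways to reduce your network costs, increase bandwidth throughput, and potentially get a more consistent network experience. Here is the complete list of locations:

- CoreSite 32 Avenue of the Americas, New York - Connect to US East (Northern Virginia). New.

- Terremark NAP do Brasil - Connect to South America (Sao Paulo). New.

- CoreSite One Wilshire, Los Angeles, CA - Connect to US West (Northern California).

- Equinix DC1 – DC6 & DC10, Ashburn, VA - Connect to US East (Northern Virginia).

- Equinix SV1 & SV5, San Jose, CA - Connect to US West (Northern California).

- Equinix SG2, Singapore - Connect to Asia Pacific (Singapore).

- Equinix TY2, Tokyo - Connect to Asia Pacific (Tokyo).

- TelecityGroup Docklands, London - Connect to EU West (Ireland).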
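If you plan capacity in scripts, the location-to-Region pairs from this announcement fit naturally in a small lookup table. This is a minimal sketch: the dictionary only transcribes the eight locations named in this post, and the name `locations_for_region` is hypothetical. The authoritative, current list comes from the Direct Connect `DescribeLocations` API and has changed since this post.

```python
# Location -> AWS Region pairs transcribed from this announcement.
# Hypothetical lookup table; the live list is available from the
# Direct Connect DescribeLocations API.
DIRECT_CONNECT_LOCATIONS: dict[str, str] = {
    "CoreSite 32 Avenue of the Americas, New York": "US East (Northern Virginia)",
    "Terremark NAP do Brasil": "South America (Sao Paulo)",
    "CoreSite One Wilshire, Los Angeles, CA": "US West (Northern California)",
    "Equinix DC1 - DC6 & DC10, Ashburn, VA": "US East (Northern Virginia)",
    "Equinix SV1 & SV5, San Jose, CA": "US West (Northern California)",
    "Equinix SG2, Singapore": "Asia Pacific (Singapore)",
    "Equinix TY2, Tokyo": "Asia Pacific (Tokyo)",
    "TelecityGroup Docklands, London": "EU West (Ireland)",
}


def locations_for_region(region: str) -> list[str]:
    """Return, sorted by name, the locations that connect to `region`."""
    return sorted(
        location
        for location, connected_region in DIRECT_CONNECT_LOCATIONS.items()
        if connected_region == region
    )


if __name__ == "__main__":
    # List every location that lands in US East (Northern Virginia).
    for loc in locations_for_region("US East (Northern Virginia)"):
        print(loc)
```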

Console Support

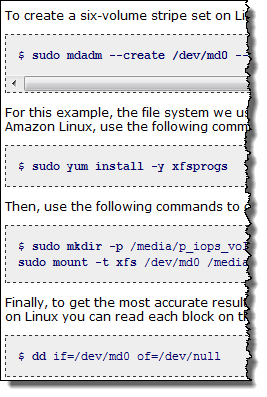

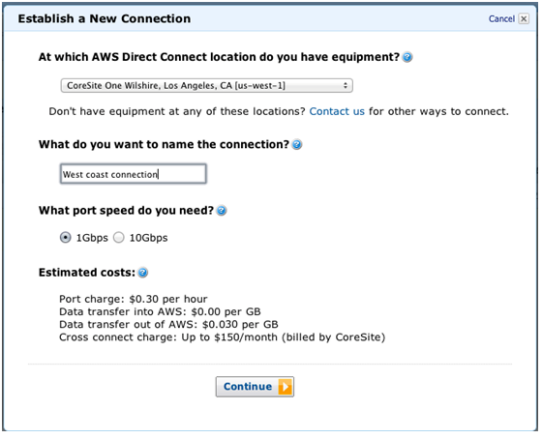

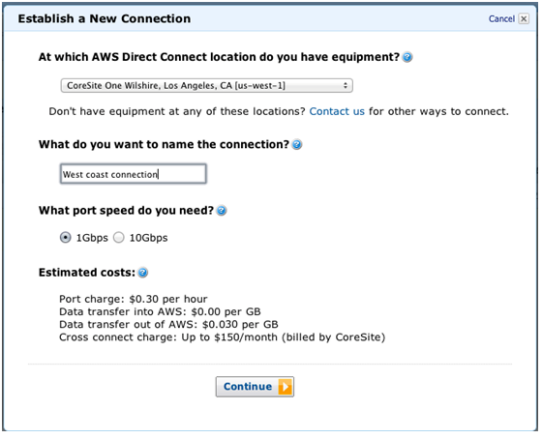

Up until now, you needed to fill in a web form to start setting up a connection. To make the process simpler and smoother, you can now place your order and manage your connections through the AWS Management Console.

Here's a tour. You can establish a new connection by selecting the Direct Connect tab in the console:

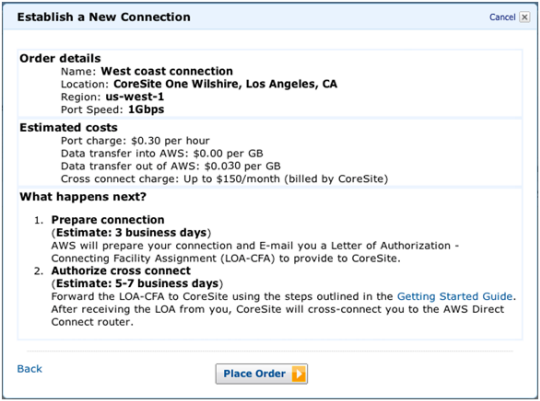

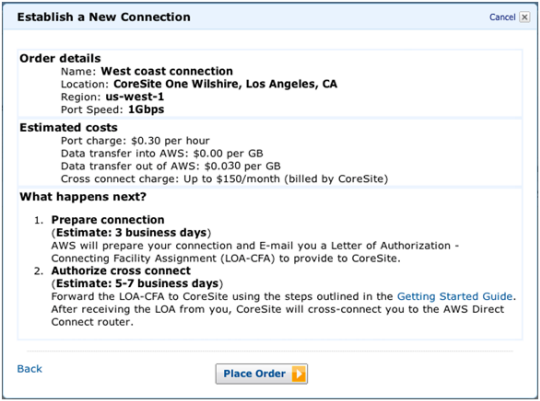

After you confirm your choices, you can place your order with one final click:

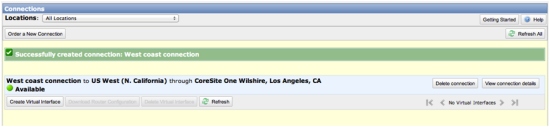

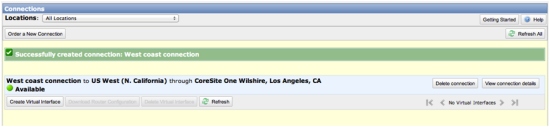
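The same order can also be placed programmatically. The sketch below assumes a Direct Connect client object that exposes `create_connection(location=..., bandwidth=..., connectionName=...)`, as today's boto3 `directconnect` client does; the client is passed in rather than created, so nothing here talks to AWS on its own. The helper name `order_connection` is hypothetical, and restricting the speed to the two port sizes named in this post is a deliberate simplification. Current offerings may differ.

```python
# Port speeds named in this post, spelled the way the Direct Connect API
# expresses bandwidth. Other speeds are deliberately rejected in this sketch.
SUPPORTED_BANDWIDTHS = ("1Gbps", "10Gbps")


def order_connection(client, location_code: str, bandwidth: str, name: str) -> dict:
    """Request a dedicated connection and return the API response.

    `client` is assumed to behave like
    boto3.client("directconnect", region_name=...); `location_code` is the
    short location code (for example, one returned by describe_locations()).
    """
    if bandwidth not in SUPPORTED_BANDWIDTHS:
        raise ValueError(
            f"unsupported bandwidth {bandwidth!r}; expected one of {SUPPORTED_BANDWIDTHS}"
        )
    # Validation passed: forward the order to the API.
    return client.create_connection(
        location=location_code,
        bandwidth=bandwidth,
        connectionName=name,
    )
```

Validating the bandwidth before calling the API keeps a typo like `"10gbps"` from turning into a failed order request.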

You can see all of your connections in a single (global) list:

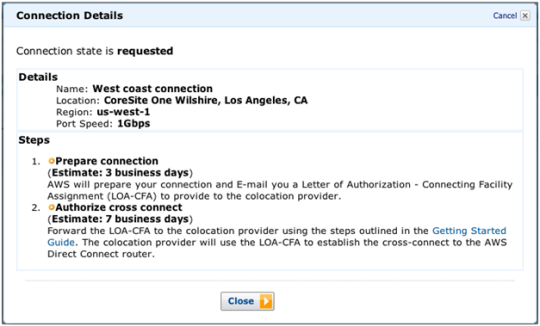

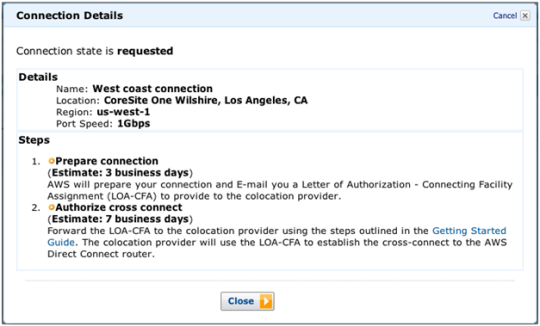
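The console's single list spans Regions, while an API client is bound to one Region. A minimal sketch of building the same combined view is to call `describe_connections()` once per Region and merge the results. It assumes a caller-supplied factory such as `lambda r: boto3.client("directconnect", region_name=r)`; the function name is hypothetical.

```python
from typing import Any, Callable, Iterable


def list_connections_all_regions(
    make_client: Callable[[str], Any], regions: Iterable[str]
) -> list[dict]:
    """Merge describe_connections() results from several Regions into one list.

    `make_client(region)` must return a Direct Connect client for that Region.
    """
    merged: list[dict] = []
    for region in regions:
        response = make_client(region).describe_connections()
        merged.extend(response.get("connections", []))
    # Sort so the combined view is stable: by Region, then by connection name.
    merged.sort(key=lambda c: (c.get("region", ""), c.get("connectionName", "")))
    return merged
```

For the Regions reachable from the locations in this post, `regions` would be `["us-east-1", "us-west-1", "sa-east-1", "eu-west-1", "ap-southeast-1", "ap-northeast-1"]`.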

You can inspect the details of each connection:

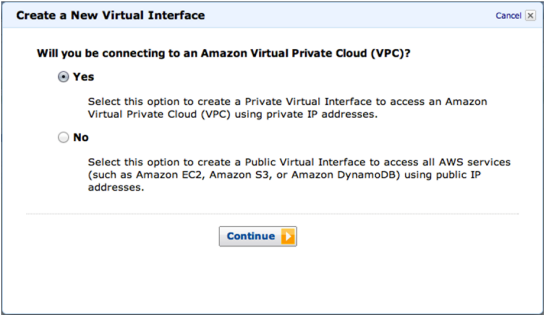

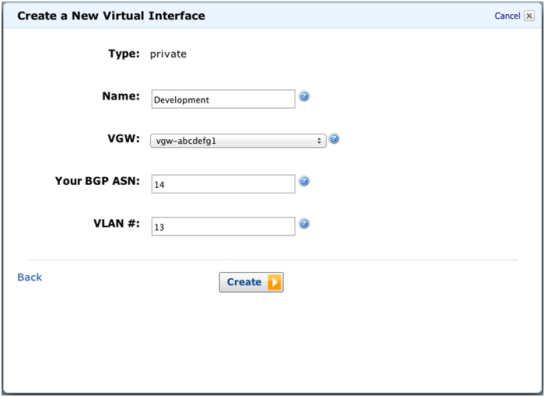

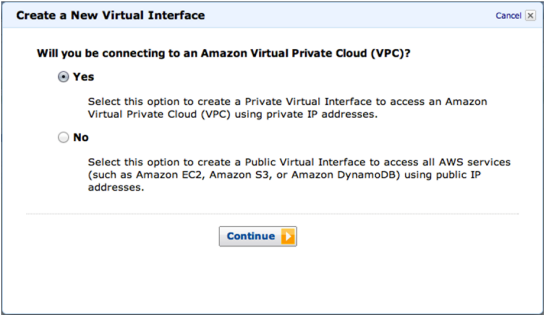

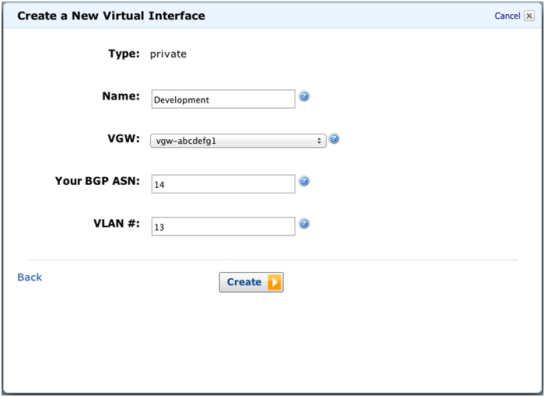
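The most useful detail while an order progresses is usually the connection state. This sketch assumes a client whose `describe_connections(connectionId=...)` returns a `connections` list, as the boto3 `directconnect` client does. The state descriptions are paraphrased from the API reference and worth re-checking there. `summarize_connection` is a hypothetical helper.

```python
# Meanings of the connectionState values returned by describe_connections(),
# paraphrased from the Direct Connect API reference.
CONNECTION_STATES = {
    "ordering": "hosted connection awaiting confirmation by its owner",
    "requested": "order received; waiting for the Letter of Authorization (LOA)",
    "pending": "approved and being initialized",
    "available": "network link is up and ready for use",
    "down": "network link is down",
    "deleting": "being deleted",
    "deleted": "deleted",
    "rejected": "hosted connection was deleted while still being ordered",
    "unknown": "state is not available",
}


def summarize_connection(client, connection_id: str) -> str:
    """Return a one-line, human-readable summary of a single connection."""
    response = client.describe_connections(connectionId=connection_id)
    connections = response.get("connections", [])
    if not connections:
        raise LookupError(f"no connection with id {connection_id!r}")
    conn = connections[0]
    state = conn.get("connectionState", "unknown")
    meaning = CONNECTION_STATES.get(state, "unrecognized state")
    return (
        f"{conn.get('connectionName', connection_id)} at {conn.get('location', '?')} "
        f"({conn.get('bandwidth', '?')}): {state} ({meaning})"
    )
```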

You can then create a virtual interface on your connection. The interface can connect to one of your Virtual Private Clouds (a private virtual interface) or to the full set of AWS services (a public virtual interface):

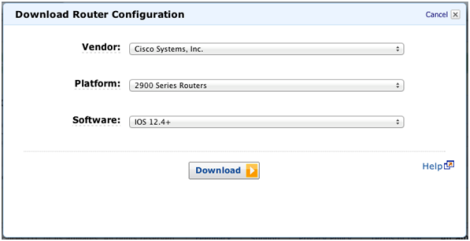

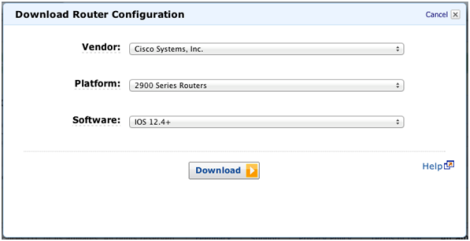
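The private-versus-public choice maps onto two separate API calls. In today's boto3 `directconnect` client these are `create_private_virtual_interface(connectionId=..., newPrivateVirtualInterface=...)` and `create_public_virtual_interface(connectionId=..., newPublicVirtualInterface=...)`. The sketch below builds the request payload for either case and dispatches to the matching call. The helper names are hypothetical. The public case omits the BGP peer addresses a real request needs, so treat it as an outline rather than a complete request.

```python
from typing import Optional, Sequence, Tuple


def build_virtual_interface(
    name: str,
    vlan: int,
    asn: int,
    *,
    virtual_gateway_id: Optional[str] = None,
    public_prefixes: Optional[Sequence[str]] = None,
) -> Tuple[str, dict]:
    """Return ("private" | "public", payload) for a new virtual interface.

    Pass virtual_gateway_id to reach a VPC, or public_prefixes to reach
    public AWS services; exactly one of the two must be given.
    """
    if not 1 <= vlan <= 4094:  # valid 802.1Q VLAN IDs
        raise ValueError(f"VLAN {vlan} is outside 1-4094")
    if (virtual_gateway_id is None) == (public_prefixes is None):
        raise ValueError("give exactly one of virtual_gateway_id or public_prefixes")
    payload = {"virtualInterfaceName": name, "vlan": vlan, "asn": asn}
    if virtual_gateway_id is not None:
        payload["virtualGatewayId"] = virtual_gateway_id
        return "private", payload
    # A real public interface also needs BGP peer addresses (amazonAddress,
    # customerAddress); they are omitted to keep the sketch short.
    payload["routeFilterPrefixes"] = [{"cidr": cidr} for cidr in public_prefixes]
    return "public", payload


def create_virtual_interface(client, connection_id: str, kind: str, payload: dict) -> dict:
    """Send the payload to the API call that matches its kind."""
    if kind == "private":
        return client.create_private_virtual_interface(
            connectionId=connection_id, newPrivateVirtualInterface=payload
        )
    return client.create_public_virtual_interface(
        connectionId=connection_id, newPublicVirtualInterface=payload
    )
```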

You can even download a router configuration file tailored to the brand, model, and version of your router.
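The current Direct Connect API appears to expose the same download as `describe_router_configuration(virtualInterfaceId=..., routerTypeIdentifier=...)`, returning the text in `customerRouterConfig`. Verify that against the boto3 reference before relying on it. In this minimal sketch, the router-type identifier (vendor, platform, and software version combined) is a caller-supplied placeholder. The helper name and the `.cfg` file naming are assumptions.

```python
from pathlib import Path


def save_router_config(client, virtual_interface_id: str, router_type: str, out_dir: Path) -> Path:
    """Fetch the sample router configuration for a virtual interface and save it.

    `router_type` is a routerTypeIdentifier combining vendor, platform, and
    software version; any value passed here is a placeholder, not a real ID.
    """
    response = client.describe_router_configuration(
        virtualInterfaceId=virtual_interface_id,
        routerTypeIdentifier=router_type,
    )
    out_dir.mkdir(parents=True, exist_ok=True)
    path = out_dir / f"{virtual_interface_id}.cfg"
    # Write the vendor-specific configuration text returned by the API.
    path.write_text(response["customerRouterConfig"])
    return path
```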

Get Connected

And there you have it! Learn more about AWS Direct Connect and get started today.
